
Java Implementation of SARSA and Q-learning for a Grid World in Reinforcement Learning, with Parameter Tuning


Uploaded: 2025-01-14 21:05:35 (PDF, 262 KB)


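Summary: This coursework is the fourth assignment for CS 457/557. It asks students to implement two basic reinforcement learning algorithms, SARSA and Q-learning, in a simple but complete task environment. Command-line arguments set key variables such as the initial learning rate (step size) α, the policy randomness value ε, and the discount rate γ. Students implement and evaluate the resulting policies and finally produce a table of the learned navigation policy. The assignment also specifies the environment details (start position, obstacles, cliffs, mines), how parameters change during learning, the output format, and optional extras (a λ parameter for eligibility traces and a feature-based method for estimating state-action values). To ensure academic integrity, it stresses that the work must be completed independently and forbids any form of cheating.

Intended audience: senior undergraduates, first-year graduate students, and above, especially computer science majors interested in machine learning and reinforcement learning.

Use cases and goals: The assignment provides hands-on practice so that students understand in depth the basic ideas, mechanics, applications, and strengths and weaknesses of these two classic reinforcement learning algorithms. By setting specific experimental conditions and observing convergence and policy quality, students consolidate both the theory and the implementation skills. It also serves as a check on how well students understand the material.

Reading suggestions: First read background material on SARSA and Q-learning, then implement the program step by step. For each part, think carefully about what each parameter means and what range it may take, and record and compare the results of every test run. Because several action-selection rules are possible, you can try different exploration strategies during implementation to find the one that works best. Adding extra features, or combining several improvements, may also produce unexpectedly good results.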


CS 457/557: Assignment 4

Due: December 11, 2024, by 11:00 pm (Central)

Overview

In this assignment, you will be implementing the SARSA and Q-learning algorithms for a navigation task in a simple grid world environment.

Academic Integrity Policy

All work for this assignment must be your own work, and it must be completed independently. Using code from other students and/or online sources (e.g., GitHub) constitutes academic misconduct, as does sharing your code with others either directly or indirectly (e.g., by posting it online). Academic misconduct is a violation of the UWL Student Honor Code and is unacceptable. Plagiarism or cheating in any form may result in a zero on this assignment, a negative score on this assignment, failure of the course, and/or additional sanctions. Refer to the course syllabus for additional details on academic misconduct.

You should be able to complete the assignment using only the course notes and textbook along with relevant programming language documentation (e.g., the Java API specification). Use of additional resources is discouraged but not prohibited, provided that this is limited to high-level queries and not assignment-specific concepts. As a concrete example, searching for “how to use a HashMap in Java” is fine, but searching for “Q-learning in Java” is not.

Deliverables

You should submit to Canvas a single compressed archive (either .zip or .tgz format) containing the following:

1. The complete source code for your program. I prefer that you use Java in your implementation, but if you would like to use another language, check with me before you get started. Additional source code requirements are listed below:

• Your name must be included in a header comment at the top of each source code file.

• Your code should follow proper software engineering principles for the chosen language, including meaningful comments and appropriate code style.

• Your code must not make use of any non-standard or third-party libraries.

2. A README text file that provides instructions for how your program can be compiled (as needed) and run from the command line. If your program is incomplete, then your README should document what parts of the program are and are not working.


Program Requirements

The general outline of the program is given below:

Algorithm 1 Program Flow

Process command-line arguments

Load environment from the specified file

Perform learning episodes, with periodic parameter updates and/or evaluation

Perform final evaluation of pure greedy policy based on learned Q values

Print final learned policy and additional details as appropriate
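As a rough illustration of the learning and final-evaluation steps, the sketch below shows ε-greedy action selection during learning and extraction of the pure greedy policy afterward. It assumes Q values are stored in a table `q[state][action]` with the four actions indexed 0 to 3. That layout, the class name `EpsilonGreedy`, and the reading of ε as "the probability of taking a uniformly random action" are assumptions for illustration, not requirements stated in the assignment.

```java
import java.util.Random;

/**
 * Sketch of epsilon-greedy action selection over a tabular Q function.
 * Assumes (hypothetically) that Q values live in q[state][action] and that
 * actions are indexed 0..3.
 */
class EpsilonGreedy {

    /** Returns the index of the highest-valued action; the lowest index wins ties. */
    static int greedyAction(double[] qRow) {
        int best = 0;
        for (int a = 1; a < qRow.length; a++) {
            if (qRow[a] > qRow[best]) {
                best = a;
            }
        }
        return best;
    }

    /** With probability epsilon picks a uniformly random action; otherwise acts greedily. */
    static int selectAction(double[] qRow, double epsilon, Random rng) {
        if (rng.nextDouble() < epsilon) {
            return rng.nextInt(qRow.length);
        }
        return greedyAction(qRow);
    }

    /** Extracts the pure greedy policy (one action per state) for final evaluation and printing. */
    static int[] greedyPolicy(double[][] q) {
        int[] policy = new int[q.length];
        for (int s = 0; s < q.length; s++) {
            policy[s] = greedyAction(q[s]);
        }
        return policy;
    }
}
```

Ties go to the lowest action index here. Breaking ties at random is an equally reasonable choice, and it can matter early in learning, when many Q values are still equal.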

The program should be runnable from the command line, and it should be able to process command-line arguments to update various program parameters as needed. The command-line arguments are listed below:

• -f <FILENAME>: Reads the environment from the file named <FILENAME> (specified as a

String); see the File Format section for more details.

• -a <DOUBLE>: Specifies the (initial) learning rate (step size) α ∈ [0, 1]; default is 0.9.

• -e <DOUBLE>: Specifies the (initial) policy randomness value ϵ ∈ [0, 1]; default is 0.9.

• -g <DOUBLE>: Specifies the discount rate γ ∈ [0, 1] to use during learning; default is 0.9.

• -na <INTEGER>: Specifies the value N_α, which controls the decay of the learning rate (step size) α; default is 1000.

• -ne <INTEGER>: Specifies the value N_ϵ, which controls the decay of the random action threshold ϵ; default is 200.

• -p <DOUBLE>: Specifies the action success probability p ∈ [0, 1]; default is 0.8.

• -q: Toggles the use of Q-Learning with off-policy updates (instead of SARSA with on-policy updates, which is the default).

• -T <INTEGER>: Specifies the number of learning episodes (trials) to perform; default is 10000.

• -u: Toggles the use of Unicode characters in printing; disabled by default (see the Output section for details).

• -v <INTEGER>: Specifies a verbosity level, indicating how much output the program should produce; default is 1 (see the Output section for details).
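The -q toggle changes only the bootstrap term of the one-step update. The sketch below uses the standard textbook forms: SARSA bootstraps from Q(s′, a′) for the action a′ actually chosen next, while Q-learning bootstraps from max over a′ of Q(s′, a′). The reward r is passed in because the reward scheme is not given in this excerpt, and α is a parameter so that a decayed value (controlled by N_α) can be supplied by the caller. The class and method names are hypothetical.

```java
/**
 * Sketch of the one-step tabular TD update behind the -q toggle.
 * SARSA (default): target = r + gamma * Q(s', a'), where a' is the next action actually taken.
 * Q-learning (-q): target = r + gamma * max_a' Q(s', a').
 * A terminal transition contributes no future value.
 */
class TdUpdate {

    /** Largest entry in a row of the Q table. */
    static double maxValue(double[] row) {
        double best = row[0];
        for (double v : row) {
            best = Math.max(best, v);
        }
        return best;
    }

    /**
     * Updates q[s][a] in place and returns the new value.
     * aNext is ignored when qLearning is true or when the transition is terminal.
     */
    static double update(double[][] q, int s, int a, double r, int sNext, int aNext,
                         boolean terminal, boolean qLearning, double alpha, double gamma) {
        double bootstrap;
        if (terminal) {
            bootstrap = 0.0;                    // no further actions after a terminal cell
        } else if (qLearning) {
            bootstrap = maxValue(q[sNext]);     // off-policy: greedy value of s'
        } else {
            bootstrap = q[sNext][aNext];        // on-policy: value of the action actually chosen
        }
        double target = r + gamma * bootstrap;
        q[s][a] += alpha * (target - q[s][a]);
        return q[s][a];
    }
}
```

With α = 0.5, γ = 0.9, r = 0, and Q(s′, ·) = (1, 2, 0, 0), taking a′ = 0 gives a SARSA update of 0.5 · 0.9 · 1 = 0.45. The Q-learning update instead uses the maximum, giving 0.5 · 0.9 · 2 = 0.9.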

The -f <FILENAME> option is required; all others are optional. You can assume that your program will only be run with valid arguments (so you do not need to include error checking, though it may be helpful for your own testing). Your program must be able to handle command-line arguments in any order (e.g., do not assume that the first argument will be -f). Several example runs of the program are shown at the end of this document.
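One way to accept the options in any order is to scan the argument array once and let each flag consume its own value. The sketch below applies the defaults listed above. The field names and the `Config` class are hypothetical, the extra-credit -l and -w options are left out, and unrecognized flags throw an exception, which is a convenience for testing rather than a stated requirement.

```java
/**
 * Sketch of order-independent command-line parsing using the documented defaults.
 * Field names are hypothetical; -q and -u toggle (flip) their flags.
 */
class Config {
    String fileName = null;      // -f (required)
    double alpha = 0.9;          // -a: initial learning rate
    double epsilon = 0.9;        // -e: initial policy randomness
    double gamma = 0.9;          // -g: discount rate
    int nAlpha = 1000;           // -na: controls decay of alpha
    int nEpsilon = 200;          // -ne: controls decay of epsilon
    double p = 0.8;              // -p: action success probability
    boolean qLearning = false;   // -q: Q-learning instead of SARSA
    int trials = 10000;          // -T: number of learning episodes
    boolean unicode = false;     // -u: Unicode output
    int verbosity = 1;           // -v: verbosity level

    static Config parse(String[] args) {
        Config c = new Config();
        for (int i = 0; i < args.length; i++) {
            switch (args[i]) {
                case "-f":  c.fileName = args[++i]; break;
                case "-a":  c.alpha = Double.parseDouble(args[++i]); break;
                case "-e":  c.epsilon = Double.parseDouble(args[++i]); break;
                case "-g":  c.gamma = Double.parseDouble(args[++i]); break;
                case "-na": c.nAlpha = Integer.parseInt(args[++i]); break;
                case "-ne": c.nEpsilon = Integer.parseInt(args[++i]); break;
                case "-p":  c.p = Double.parseDouble(args[++i]); break;
                case "-q":  c.qLearning = !c.qLearning; break;
                case "-T":  c.trials = Integer.parseInt(args[++i]); break;
                case "-u":  c.unicode = !c.unicode; break;
                case "-v":  c.verbosity = Integer.parseInt(args[++i]); break;
                default:
                    throw new IllegalArgumentException("Unrecognized option: " + args[i]);
            }
        }
        return c;
    }
}
```

For example, `Config.parse(new String[] {"-q", "-a", "0.5", "-f", "grid.txt"})` enables Q-learning, sets α to 0.5, and keeps every other default.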

Extra credit: Any program that includes support for one or both of the following options will be eligible for a small amount of extra credit (see page 10 for details):

• -l <DOUBLE>: Specifies the λ parameter for eligibility trace decay; default is 0.0 (meaning that eligibility traces should not be used by default).

• -w: Specifies that the agent should use a weighted sum of features to estimate Q values for each state-action pair instead of maintaining these values in a table; disabled by default.
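For the -w option, one common way to realize "a weighted sum of features" is linear function approximation, Q(s, a) ≈ Σᵢ wᵢ fᵢ(s, a), with a semi-gradient TD update of the weights. The sketch assumes the caller supplies a fixed-length feature vector f(s, a) and a TD target computed as in the SARSA or Q-learning case. The `LinearQ` name, the zero initial weights, and the feature vector itself are illustrative assumptions. Choosing good features is part of the extra-credit work.

```java
/**
 * Sketch of a linear Q-value estimate for the -w option.
 * Q(s, a) = sum_i w_i * f_i(s, a); the feature vector is a hypothetical input.
 */
class LinearQ {
    final double[] weights;

    /** Creates an estimator whose weights all start at zero. */
    LinearQ(int numFeatures) {
        weights = new double[numFeatures];
    }

    /** Returns the weighted sum of the given feature values. */
    double value(double[] features) {
        double sum = 0.0;
        for (int i = 0; i < weights.length; i++) {
            sum += weights[i] * features[i];
        }
        return sum;
    }

    /** Semi-gradient TD step: w_i += alpha * (target - Q(s, a)) * f_i(s, a). */
    void update(double[] features, double target, double alpha) {
        double delta = target - value(features);
        for (int i = 0; i < weights.length; i++) {
            weights[i] += alpha * delta * features[i];
        }
    }
}
```

Because the gradient of a linear estimate with respect to wᵢ is just fᵢ(s, a), each weight moves in proportion to how active its feature was. States that share features therefore share what is learned, unlike entries in a table.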


Environment Details

The environment in which the agent operates consists of a rectangular grid of cells, with each cell belonging to one of the following types:

• S indicates the start cell for an agent

• G indicates a goal cell

• indicates an empty cell

• B indicates a block cell

• M indicates an explosive mine cell

• C indicates a cliff cell

In an environment, the agent begins in the start cell (S) and can choose to move in one of four directions (up, down, left, and right). Goal (G) and mine (M) cells are terminal states, which means no further action is possible upon reaching those states. Cliff (C) cells are “restart” cells: any action taken in a cliff cell causes the agent to return to the start cell for the next step. Block (B) cells are obstacles that the agent must circumvent (i.e., the agent cannot enter a block cell).
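The cell types and their special rules map naturally onto a small enum. In the sketch below, the grid is built from text rows with one character per cell. That layout is an assumption, because the File Format section is not part of this excerpt. The symbol for empty cells does not appear in this copy either, so any character other than S, G, B, M, or C is treated as empty. `CellType` and `CellGrid` are hypothetical names.

```java
/**
 * Sketch of the cell types. Assumption: any symbol other than S, G, B, M, C
 * denotes an empty cell.
 */
enum CellType {
    START, GOAL, EMPTY, BLOCK, MINE, CLIFF;

    static CellType fromChar(char ch) {
        switch (ch) {
            case 'S': return START;
            case 'G': return GOAL;
            case 'B': return BLOCK;
            case 'M': return MINE;
            case 'C': return CLIFF;
            default:  return EMPTY; // assumption: unrecognized symbol = empty cell
        }
    }

    /** Goal and mine cells are terminal: no further action is possible. */
    boolean isTerminal() {
        return this == GOAL || this == MINE;
    }

    /** Block cells can never be entered. */
    boolean isEnterable() {
        return this != BLOCK;
    }
}

/** Hypothetical grid built from text rows (one character per cell). */
class CellGrid {
    final CellType[][] cells;
    final int startRow;
    final int startCol;

    CellGrid(String[] rows) {
        cells = new CellType[rows.length][];
        int sr = -1, sc = -1;
        for (int r = 0; r < rows.length; r++) {
            cells[r] = new CellType[rows[r].length()];
            for (int c = 0; c < rows[r].length(); c++) {
                cells[r][c] = CellType.fromChar(rows[r].charAt(c));
                if (cells[r][c] == CellType.START) {
                    sr = r;
                    sc = c;
                }
            }
        }
        startRow = sr;
        startCol = sc;
    }

    /** True if any action taken from this cell returns the agent to the start cell. */
    boolean restartsFrom(int row, int col) {
        return cells[row][col] == CellType.CLIFF;
    }
}
```

Checking `restartsFrom` before sampling any movement matches the rule that the chosen direction is irrelevant in a cliff cell. The agent simply reappears at (`startRow`, `startCol`) for the next step.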

In all other types of cells, any attempt at movement either succeeds as intended with some proba-

bility p ∈ [0, 1] or results in movement plus drift with probability 1 − p. The drift is perpendicular

to the intended direction of movement, and each drift direction is equally likely. For example, in

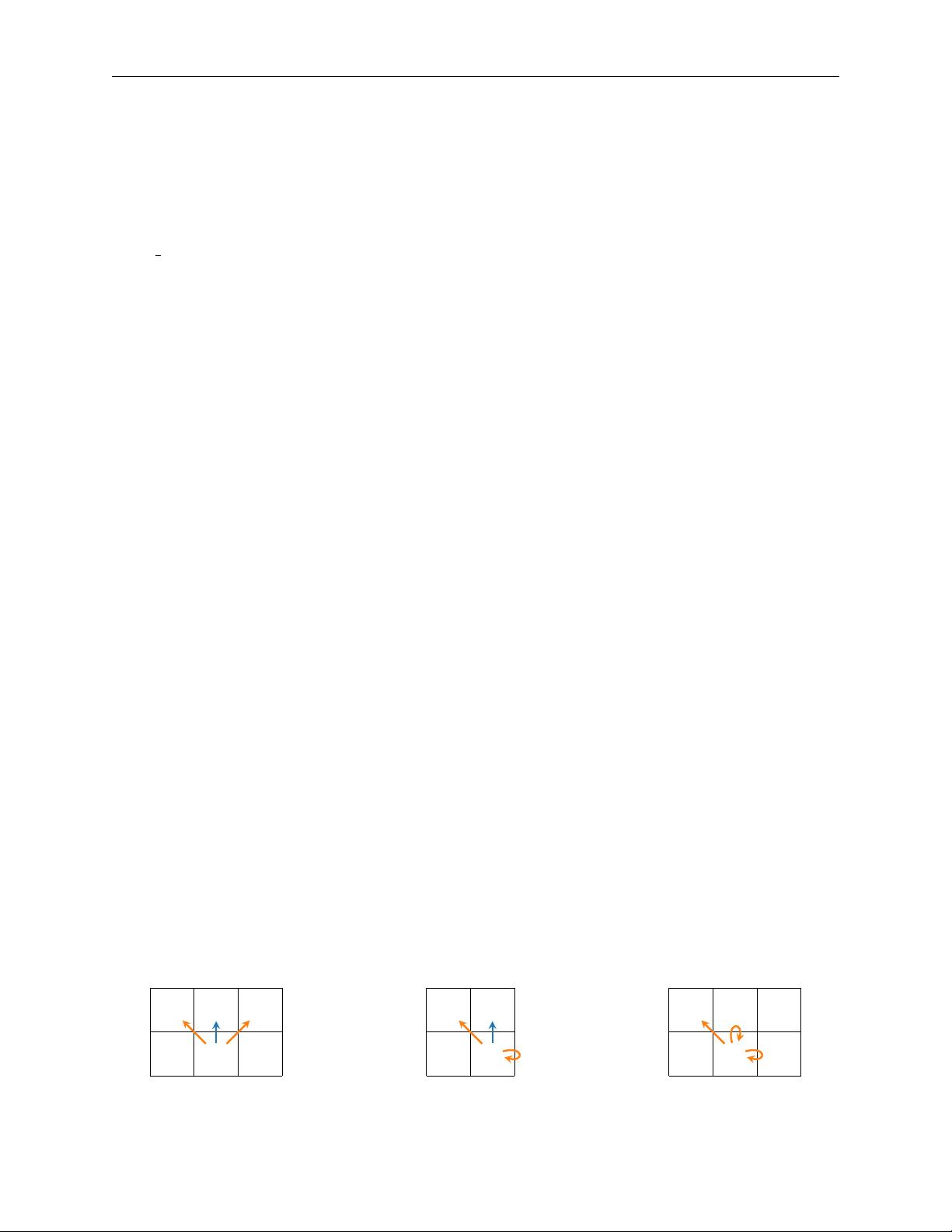

Figure 1 below, the agent is in the cell marked X and is attempting to move up to the cell marked

T. The following outcomes are possible:

• the agent moves up to enter cell T as intended with probability p;

• the agent moves up and drifts left to enter cell L with probability (1 − p)/2;

• the agent moves up and drifts right to enter cell R with probability (1 − p)/2.
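The three outcomes above can be sampled from a single uniform draw u ∈ [0, 1): u < p means no drift, and the remaining 1 − p is split evenly between the two perpendicular drifts. The sketch encodes directions as (row delta, column delta) pairs with rows increasing downward, which is an assumption about grid orientation. `DriftModel` is a hypothetical name. A drifted move is the intended step plus a perpendicular step, which produces the diagonal cells L and R in Figure 1.

```java
/**
 * Sketch of sampling a movement outcome with drift.
 * Directions are (rowDelta, colDelta); rows grow downward (an assumption).
 */
class DriftModel {
    static final int[] UP = {-1, 0}, DOWN = {1, 0}, LEFT = {0, -1}, RIGHT = {0, 1};

    /**
     * Returns the displacement actually applied, given a uniform sample u in [0, 1).
     * Probability p: intended move; (1 - p) / 2 each: intended move plus one of the
     * two perpendicular drifts.
     */
    static int[] sampleDisplacement(int[] intended, double p, double u) {
        if (u < p) {
            return new int[] {intended[0], intended[1]};    // no drift
        }
        // For an axis-aligned unit vector, swapping the components gives one
        // perpendicular vector; negating that swap gives the other.
        int[] driftA = {intended[1], intended[0]};
        int[] driftB = {-intended[1], -intended[0]};
        int[] drift = (u < p + (1.0 - p) / 2.0) ? driftA : driftB;
        return new int[] {intended[0] + drift[0], intended[1] + drift[1]};
    }
}
```

With p = 0.8 and the intended direction UP, a draw of 0.5 gives (−1, 0) (cell T), 0.85 gives (−1, −1) (cell L), and 0.95 gives (−1, 1) (cell R). In practice, u would come from `Random.nextDouble()`.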

Any movement (including movement plus drift) that would cause the agent to leave the bounds of the grid or enter a block cell (B) will fail and result in the agent staying in its current location. For example, in Figure 2 below, the agent is at the right edge of the environment and is attempting to move up to the cell marked T. The following outcomes are possible:

• the agent moves up to enter cell T as intended with probability p;

• the agent moves up and drifts left to enter cell L with probability (1 − p)/2;

• the agent remains in place with probability (1 − p)/2 because moving up and drifting right would take the agent out of bounds.

Figure 3 below shows how block cells influence outcomes. The agent is again in the cell marked X and attempting to move up. The only movement that can occur is moving up and drifting left (with probability (1 − p)/2). Moving up without drift and moving up with rightward drift both lead to block cells, which the agent cannot enter, so these outcomes result in no movement. (Note that the possibility of drift allows the agent to move diagonally in an indirect way; this can lead to some interesting “wall-hack” behavior that allows the agent to squeeze through gaps between two block cells that are adjacent to the agent’s current location.)

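Figure 1: the agent is at X; T is the cell directly above X, and L and R are the cells diagonally up-left and up-right of X.

Figure 2: the agent is at X on the right edge of the grid; T is directly above X and L is diagonally up-left of X (there is no cell to the right of T).

Figure 3: the agent is at X; L is diagonally up-left of X, while the cells directly above X and diagonally up-right of X are block cells (B).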
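Resolving a move only requires checking the destination cell. The intermediate cells are never examined, and that is exactly what makes the diagonal "wall-hack" through a gap between two blocks possible. The sketch assumes the grid is a `char[][]` in which `'B'` marks block cells. `MoveResolver` is a hypothetical name, and the displacement would come from a drift sample such as the one described above.

```java
/**
 * Sketch of resolving a (possibly diagonal) displacement against the grid.
 * Only the destination is checked: out of bounds or a block ('B') means the
 * agent stays where it is.
 */
class MoveResolver {

    /** Returns {row, col} after the move; unchanged if the move fails. */
    static int[] resolve(char[][] grid, int row, int col, int dRow, int dCol) {
        int r = row + dRow;
        int c = col + dCol;
        // Short-circuit evaluation guarantees grid[r] is only read when r is in range.
        boolean outOfBounds = r < 0 || r >= grid.length || c < 0 || c >= grid[r].length;
        if (outOfBounds || grid[r][c] == 'B') {
            return new int[] {row, col};
        }
        return new int[] {r, c};
    }
}
```

Applied to Figure 3 (blocks directly above and up-right of X), only the up-left displacement changes the agent's position. Applied to Figure 2, the up-right displacement leaves the grid, so the agent stays put.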
