📚 This guide explains how to use **Weights & Biases** (W&B) with YOLOv5 🚀. UPDATED 29 September 2021.

- [About Weights & Biases](#about-weights--biases)

- [First-Time Setup](#first-time-setup)

- [Viewing runs](#viewing-runs)

- [Disabling wandb](#disabling-wandb)

- [Advanced Usage: Dataset Versioning and Evaluation](#advanced-usage)

- [Reports: Share your work with the world!](#reports)

## About Weights & Biases

Think of [W&B](https://wandb.ai/site?utm_campaign=repo_yolo_wandbtutorial) like GitHub for machine learning models. With a few lines of code, save everything you need to debug, compare and reproduce your models: architecture, hyperparameters, git commits, model weights, GPU usage, and even datasets and predictions.

Used by top researchers including teams at OpenAI, Lyft, GitHub, and MILA, W&B is part of the new standard of best practices for machine learning. Here is how W&B can help you optimize your machine learning workflows:

- [Debug](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Free-2) model performance in real time

- [GPU usage](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#System-4) visualized automatically

- [Custom charts](https://wandb.ai/wandb/customizable-charts/reports/Powerful-Custom-Charts-To-Debug-Model-Peformance--VmlldzoyNzY4ODI) for powerful, extensible visualization

- [Share insights](https://wandb.ai/wandb/getting-started/reports/Visualize-Debug-Machine-Learning-Models--VmlldzoyNzY5MDk#Share-8) interactively with collaborators

- [Optimize hyperparameters](https://docs.wandb.com/sweeps) efficiently

- [Track](https://docs.wandb.com/artifacts) datasets, pipelines, and production models

## First-Time Setup

<details open>

<summary> Toggle Details </summary>

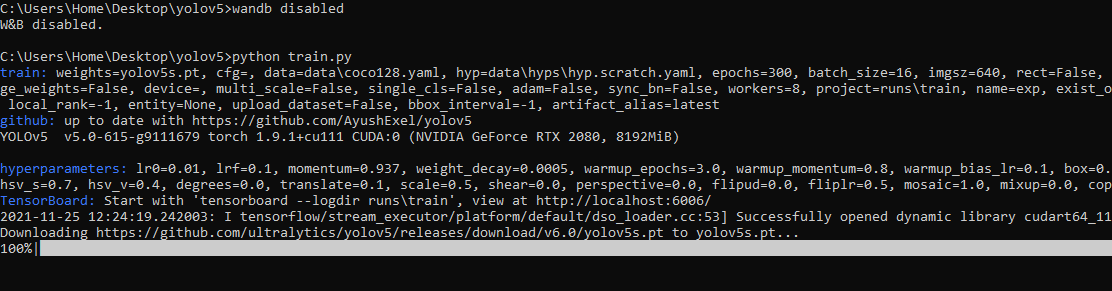

When you first train, W&B will prompt you to create a new account and will generate an **API key** for you. If you are an existing user, you can retrieve your key from https://wandb.ai/authorize. This key tells W&B where to log your data. You only need to supply your key once; it is then remembered on that device.

W&B will create a cloud **project** (default is 'YOLOv5') for your training runs, and each new training run is given a unique run **name** within that project, shown as project/name. You can also set your project and run name manually:

```shell
$ python train.py --project ... --name ...
```
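
How these two flags might map onto the W&B project and run name can be sketched as follows. This is a minimal, hypothetical helper (`wandb_project_and_name` is not part of YOLOv5), assuming YOLOv5's CLI defaults of `--project runs/train` and `--name exp`: with the default project, runs go to the `YOLOv5` W&B project, otherwise the last component of the `--project` path is used, and with the default name W&B generates a unique run name. Check `wandb_utils.py` in your checkout for the exact rule.

```python
from pathlib import PurePosixPath
from typing import Optional, Tuple

# Assumed YOLOv5 CLI defaults (hypothetical constants for this sketch).
DEFAULT_PROJECT = "runs/train"
DEFAULT_NAME = "exp"


def wandb_project_and_name(project: str = DEFAULT_PROJECT,
                           name: str = DEFAULT_NAME) -> Tuple[str, Optional[str]]:
    """Return (W&B project, run name); a None name means W&B generates one."""
    # Default --project -> the 'YOLOv5' cloud project; otherwise use the last path component.
    wb_project = "YOLOv5" if project == DEFAULT_PROJECT else PurePosixPath(project).name
    # Default --name -> let W&B assign a unique run name.
    wb_name = None if name == DEFAULT_NAME else name
    return wb_project, wb_name


if __name__ == "__main__":
    print(wandb_project_and_name())                                # ('YOLOv5', None)
    print(wandb_project_and_name("runs/my_project", "baseline"))  # ('my_project', 'baseline')
```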

YOLOv5 notebook example: <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

<img width="960" alt="Screen Shot 2021-09-29 at 10 23 13 PM" src="https://user-images.githubusercontent.com/26833433/135392431-1ab7920a-c49d-450a-b0b0-0c86ec86100e.png">

</details>

## Viewing Runs

<details open>

<summary> Toggle Details </summary>

Run information streams from your environment to the W&B cloud console as you train. This allows you to monitor and even cancel runs in <b>real time</b>. All important information is logged:

- Training & Validation losses

- Metrics: Precision, Recall, mAP@0.5, mAP@0.5:0.95

- Learning Rate over time

- A bounding box debugging panel, showing the training progress over time

- GPU: Type, **GPU Utilization**, power, temperature, **CUDA memory usage**

- System: Disk I/O, CPU utilization, RAM usage

- Your trained model as a W&B Artifact

- Environment: OS and Python version, Git repository and state, **training command**

<p align="center"><img width="900" alt="Weights & Biases dashboard" src="https://user-images.githubusercontent.com/26833433/135390767-c28b050f-8455-4004-adb0-3b730386e2b2.png"></p>
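
Of the metrics above, mAP@0.5 is the mean average precision at an IoU threshold of 0.5, while mAP@0.5:0.95 averages mAP over the ten IoU thresholds 0.50, 0.55, ..., 0.95 (the COCO convention). The sketch below shows only that averaging step; `map_at` is a hypothetical callable standing in for the per-threshold mAP your evaluation code produces, and the linear "toy" curve in the usage is synthetic, not a real result.

```python
from typing import Callable, List


def iou_thresholds() -> List[float]:
    """The ten COCO-style IoU thresholds 0.50, 0.55, ..., 0.95."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def map_50_95(map_at: Callable[[float], float]) -> float:
    """Average mAP over the IoU thresholds 0.50:0.05:0.95.

    `map_at` is a hypothetical callable returning mAP (already averaged over
    classes) at one IoU threshold; mAP@0.5 alone would simply be map_at(0.5).
    """
    thresholds = iou_thresholds()
    return sum(map_at(t) for t in thresholds) / len(thresholds)


if __name__ == "__main__":
    toy = lambda t: 1.0 - t  # synthetic curve: mAP drops as the IoU threshold rises
    print(iou_thresholds())  # [0.5, 0.55, 0.6, 0.65, 0.7, 0.75, 0.8, 0.85, 0.9, 0.95]
    print(round(map_50_95(toy), 3))  # 0.275
```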

</details>

## Disabling wandb

- Running `wandb disabled` inside the training directory turns W&B off there, so subsequent training runs create no W&B run

- To enable W&B again, run `wandb online`
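
Besides the CLI commands above, the W&B client reads the `WANDB_MODE` environment variable, which accepts `online`, `offline` or `disabled`. Setting it only in the environment of one training process turns logging off for that run without touching the directory setting. A minimal sketch, assuming training is launched from Python with `subprocess` and that `train.py` is in the current directory; `build_train_env` and `train_without_wandb` are hypothetical helpers.

```python
import os
import subprocess
import sys
from typing import Dict, Mapping, Optional, Sequence

VALID_MODES = {"online", "offline", "disabled"}


def build_train_env(mode: str, base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with WANDB_MODE set for one run."""
    if mode not in VALID_MODES:
        raise ValueError(f"unsupported WANDB_MODE: {mode!r}")
    env = dict(os.environ if base is None else base)  # copy; never mutate the caller's mapping
    env["WANDB_MODE"] = mode
    return env


def train_without_wandb(extra_args: Sequence[str] = ()) -> int:
    """Run train.py (assumed to be in the current directory) with W&B disabled."""
    cmd = [sys.executable, "train.py", *extra_args]
    return subprocess.run(cmd, env=build_train_env("disabled"), check=False).returncode


if __name__ == "__main__":
    print(build_train_env("disabled", base={"PATH": "/usr/bin"}))
    # {'PATH': '/usr/bin', 'WANDB_MODE': 'disabled'}
```

Scoping the variable to the child process keeps other runs in the same directory unaffected.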

## Advanced Usage

You can use the W&B Artifacts and Tables integrations to visualize and manage your datasets, models, and training evaluations. Here are some quick examples to get you started.

<details open>

<h3> 1: Train and Log Evaluation simultaneously </h3>

This combines the dataset upload described in section 2 with training: the dataset is uploaded first, and training then starts. <b>This also logs the evaluation Table.</b>

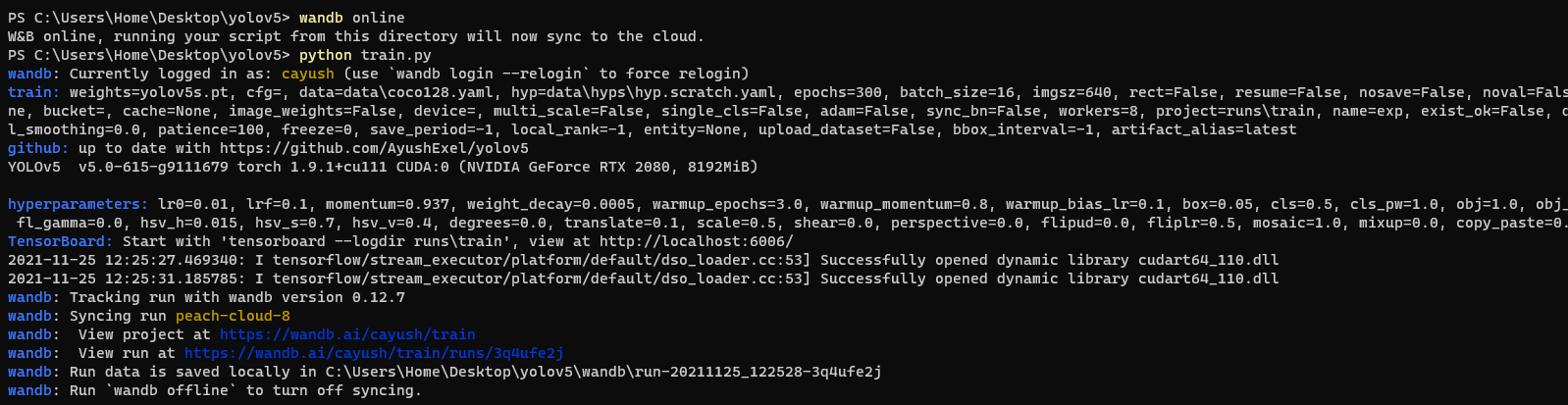

The evaluation Table compares your predictions with the ground truths across the validation set for each epoch. It references the already-uploaded dataset, so no image is uploaded from your system more than once.
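
The "upload once, reference every epoch" idea can be illustrated with a small, self-contained model. This is a conceptual sketch only, not how the W&B client is implemented; `ImageStore`, `log_epoch_table` and the row layout are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ImageStore:
    """Stand-in for remote storage: each image path is 'uploaded' at most once."""
    refs: Dict[str, str] = field(default_factory=dict)
    uploads: int = 0

    def ref(self, path: str) -> str:
        # Upload on first sight; afterwards return the existing reference.
        if path not in self.refs:
            self.uploads += 1
            self.refs[path] = f"ref-{len(self.refs)}"
        return self.refs[path]


# (epoch, image reference, predicted labels, ground-truth labels)
Row = Tuple[int, str, List[str], List[str]]


def log_epoch_table(store: ImageStore, epoch: int,
                    preds: Dict[str, List[str]], truth: Dict[str, List[str]]) -> List[Row]:
    """Build one epoch's evaluation rows that reference images instead of copying them."""
    return [(epoch, store.ref(p), preds.get(p, []), truth[p]) for p in sorted(truth)]


if __name__ == "__main__":
    store = ImageStore()
    truth = {"a.jpg": ["person"], "b.jpg": ["car", "person"]}
    for epoch in range(3):
        rows = log_epoch_table(store, epoch, {"a.jpg": ["person"]}, truth)
    print(store.uploads)  # 2: each validation image is uploaded once across 3 epochs
```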

<details open>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --upload_dataset val</code>

</details>
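
How the upload flag is interpreted with and without the `val` value can be sketched with `argparse`. This assumes the option is declared with `nargs='?'`, `const=True` and `default=False`, roughly as in YOLOv5's `train.py`; verify against your version. It also shows that `argparse` accepts unambiguous prefixes by default, so an abbreviation such as `--upload_data` parses to the same option. `parse_upload_flag` is a hypothetical helper.

```python
import argparse
from typing import List, Union


def parse_upload_flag(argv: List[str]) -> Union[bool, str]:
    """Parse only the upload flag, using the assumed YOLOv5-style declaration."""
    parser = argparse.ArgumentParser()
    # nargs='?': bare flag -> const (True), flag with a value -> that value, absent -> default.
    parser.add_argument("--upload_dataset", nargs="?", const=True, default=False,
                        help='W&B: Upload data, "val" option')
    return parser.parse_args(argv).upload_dataset


if __name__ == "__main__":
    print(parse_upload_flag([]))                           # False
    print(parse_upload_flag(["--upload_dataset"]))         # True
    print(parse_upload_flag(["--upload_dataset", "val"]))  # val
    print(parse_upload_flag(["--upload_data", "val"]))     # val (prefix abbreviation)
```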

<h3>2: Visualize and Version Datasets</h3>

Log, visualize, dynamically query, and understand your data with <a href='https://docs.wandb.ai/guides/data-vis/tables'>W&B Tables</a>. You can use the following command to log your dataset as a W&B Table. This will generate a <code>{dataset}_wandb.yaml</code> file, which can be used to train from the dataset artifact.

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python utils/loggers/wandb/log_dataset.py --project ... --name ... --data ... </code>

</details>
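
The `{dataset}` placeholder in the generated file name is the stem of the config passed to `--data`, which can be sketched as below. `wandb_config_name` is a hypothetical helper; the directory the script writes the file into is not modelled here, so check the script's console output for the actual location.

```python
from pathlib import PurePath


def wandb_config_name(data_yaml: str) -> str:
    """Return '{dataset}_wandb.yaml' for a dataset config such as 'data/coco128.yaml'."""
    return f"{PurePath(data_yaml).stem}_wandb.yaml"


if __name__ == "__main__":
    print(wandb_config_name("data/coco128.yaml"))  # coco128_wandb.yaml
```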

<h3> 3: Train using dataset artifact </h3>

When you upload a dataset as described above, you get a new config file with `_wandb` appended to its name. This file contains the information needed to train a model directly from the dataset artifact. <b>This also logs evaluation.</b>

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --data {data}_wandb.yaml </code>

</details>
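
A loader can tell artifact-backed splits from local ones by the `wandb-artifact://` prefix used elsewhere in this guide. A minimal sketch, assuming the generated `_wandb.yaml` points its `train`/`val` entries at such references and has already been loaded into a dict; `artifact_splits` and the example values are hypothetical.

```python
from typing import Dict, List

WANDB_ARTIFACT_PREFIX = "wandb-artifact://"  # prefix used in this guide


def artifact_splits(data: Dict[str, object]) -> List[str]:
    """Return the split names whose paths are W&B artifact references."""
    splits = []
    for split in ("train", "val", "test"):
        path = data.get(split)
        if isinstance(path, str) and path.startswith(WANDB_ARTIFACT_PREFIX):
            splits.append(split)
    return splits


if __name__ == "__main__":
    # Hypothetical contents of a {dataset}_wandb.yaml after loading.
    cfg = {"train": "wandb-artifact://YOLOv5/train", "val": "wandb-artifact://YOLOv5/val", "nc": 80}
    print(artifact_splits(cfg))  # ['train', 'val']
```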

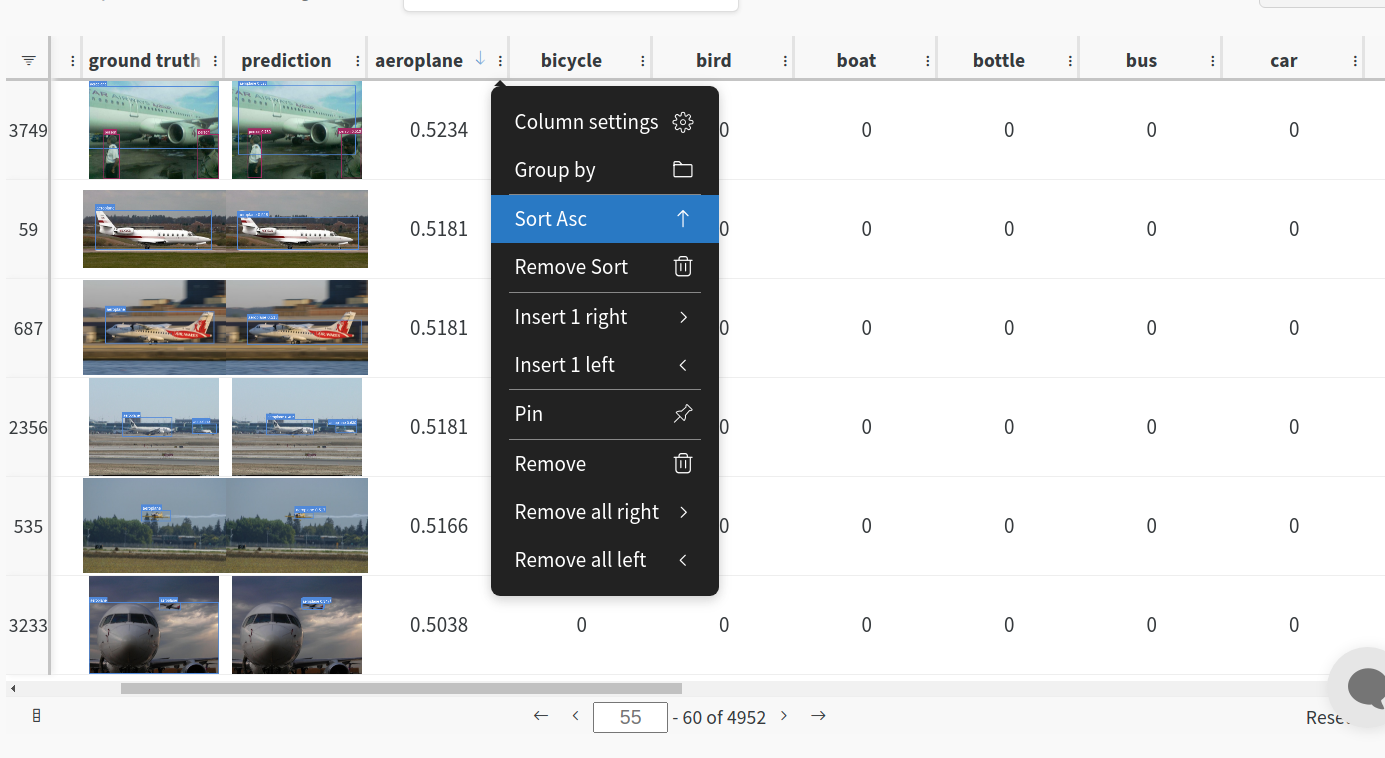

<h3> 4: Save model checkpoints as artifacts </h3>

To enable saving and versioning of your experiment's checkpoints, pass `--save_period n` with the base command, where `n` is the checkpoint interval in epochs.

You can also log both the dataset and model checkpoints simultaneously. If `--save_period` is not passed, only the final model is logged.

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --save_period 1 </code>

</details>
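
Which epochs would produce an intermediate checkpoint artifact for a given `--save_period n` can be sketched as below. This assumes a checkpoint is logged after every epoch whose 1-based number is a multiple of `n`, except the final epoch (the final model is logged separately), and that a value below 1 disables periodic saving. `checkpoint_epochs` is a hypothetical helper; check `wandb_utils.py` in your checkout for the exact rule.

```python
from typing import List


def checkpoint_epochs(total_epochs: int, save_period: int) -> List[int]:
    """1-based epochs after which an intermediate checkpoint artifact would be logged."""
    if save_period < 1:
        return []  # periodic saving disabled; only the final model is logged
    # Skip the last epoch: the final model is logged on its own at the end of training.
    return [e for e in range(1, total_epochs) if e % save_period == 0]


if __name__ == "__main__":
    print(checkpoint_epochs(10, 3))   # [3, 6, 9]
    print(checkpoint_epochs(10, 5))   # [5]
    print(checkpoint_epochs(10, -1))  # []
```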

</details>

<h3> 5: Resume runs from checkpoint artifacts </h3>

Any run can be resumed using artifacts if the <code>--resume</code> argument starts with the <code>wandb-artifact://</code> prefix followed by the run path, i.e., <code>wandb-artifact://username/project/runid</code>. This doesn't require the model checkpoint to be present on the local system.

<details>

<summary> <b>Usage</b> </summary>

<b>Code</b> <code> $ python train.py --resume wandb-artifact://{run_path} </code>

</details>
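
A `--resume` value in the `wandb-artifact://username/project/runid` form above can be split into its parts as sketched below. `parse_resume_path` and `RunPath` are hypothetical; the helper only validates and splits the string and does not contact W&B or download anything.

```python
from typing import NamedTuple

WANDB_ARTIFACT_PREFIX = "wandb-artifact://"


class RunPath(NamedTuple):
    entity: str   # W&B username or team
    project: str
    run_id: str


def parse_resume_path(resume: str) -> RunPath:
    """Split 'wandb-artifact://username/project/runid' into its components."""
    if not resume.startswith(WANDB_ARTIFACT_PREFIX):
        raise ValueError(f"not a W&B artifact path: {resume!r}")
    parts = resume[len(WANDB_ARTIFACT_PREFIX):].strip("/").split("/")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected username/project/runid, got {resume!r}")
    return RunPath(*parts)


if __name__ == "__main__":
    print(parse_resume_path("wandb-artifact://username/project/runid"))
    # RunPath(entity='username', project='project', run_id='runid')
```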

<h3> 6: Resume runs from dataset artifact & checkpoint artifacts </h3>

<b> Neither a local dataset nor local model checkpoints are required. This can be used to resume runs directly on a different device. </b>

The syntax is the same as in the previous section, but you'll need to log both the dataset and model checkpoints as artifacts, i.e., set <code>--upload_dataset</code> or train from
