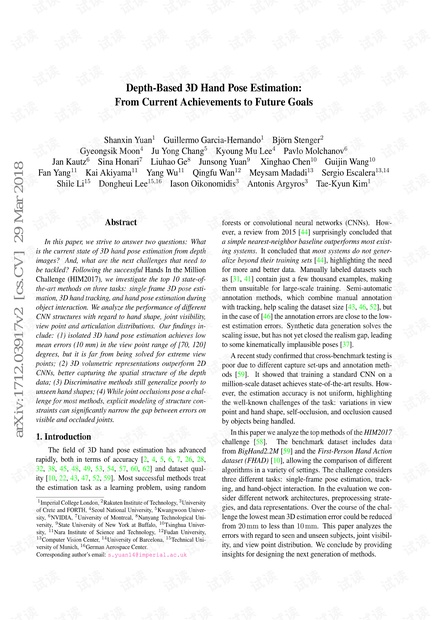

Figure 1: Evaluated tasks. For each scenario the goal is to infer the 3D locations of the 21 hand joints from a depth image. In the Single

frame pose estimation task (left) and the Interaction task (right), each frame is annotated with a bounding box. In the Tracking task (middle),

only the first frame of each sequence is fully annotated.

Related work. Public benchmarks and challenges in

other areas such as ImageNet [35] for scene classification

and object detection, PASCAL [9] for semantic and ob-

ject segmentation, and the VOT challenge [19] for object

tracking, have been instrumental in driving progress in their

respective fields. In the area of hand tracking, the review

from 2007 by Erol et al. [8] proposed a taxonomy of ap-

proaches. Learning-based approaches have been found ef-

fective for solving single-frame pose estimation, optionally

in combination with hand model fitting for higher precision,

e.g., [50]. The review by Supancic et al. [44] compared 13

methods on a new dataset and concluded that deep models

are well-suited to pose estimation. It also highlighted

the need for large-scale training sets in order to train mod-

els that generalize well. In this paper we extend the scope

of previous analyses by comparing deep learning methods

on a large-scale dataset, carrying out a fine-grained analysis

of error sources and different design choices.

2. Evaluation tasks

We evaluate three different tasks on a dataset containing

over a million annotated images using standardized evalu-

ation protocols. Benchmark images are sampled from two

datasets: BigHand2.2M [59] and First-Person Hand Action

dataset (FHAD) [10]. Images from BigHand2.2M cover a

large range of hand view points (including third-person and

first-person views), articulated poses, and hand shapes. Se-

quences from the FHAD dataset are used to evaluate pose

estimation during hand-object interaction. Both datasets

contain 640 × 480-pixel depth maps with 21 joint anno-

tations, obtained from magnetic sensors and inverse kine-

matics. The 2D bounding boxes have an average diagonal

length of 162.4 pixels with a standard deviation of 40.7 pix-

els. The evaluation tasks are 3D single hand pose estima-

tion, i.e., estimating the 3D locations of 21 joints, from (1)

individual frames, (2) video sequences, given the pose in

the first frame, and (3) frames with object interaction, e.g.,

with a juice bottle, a salt shaker, or a milk carton. See Fig-

ure 1 for an overview. Bounding boxes are provided as input

for tasks (1) and (3). The training data is sampled from the

BigHand2.2M dataset and only the interaction task uses test

data from the FHAD dataset. See Table 1 for dataset sizes

and the number of total and unseen subjects for each task.

| Number of | Train | Test (single) | Test (track) | Test (interact) |
|---|---|---|---|---|
| frames | 957K | 295K | 294K | 2,965 |
| subjects (unseen) | 5 | 10 (5) | 10 (5) | 2 (0) |

Table 1: Dataset sizes and number of subjects.

3. Evaluated methods

We evaluate the top 10 among 17 participating meth-

ods [58]. Table 2 lists the methods with some of their key

properties. We also indirectly evaluate DeepPrior [29] and

REN [15], which are components of rvhand [1], as well as

DeepModel [61], which is the backbone of LSL [20]. We

group methods based on different design choices.

2D CNN vs. 3D CNN. 2D CNNs have been popular for

3D hand pose estimation [1, 3, 14, 15, 20, 21, 23, 29, 57,

61]. Common pre-processing steps include cropping the hand

volume, resizing it, and normalizing the depth values

to [-1, 1]. Recently, several methods have used a 3D CNN

[12, 24, 56], where the input can be a 3D voxel grid [24, 56],

or a projective D-TSDF volume [12]. Ge et al. [13] project

the depth image onto three orthogonal planes and train a

2D CNN for each projection, then fuse the results. In

[12] they propose a 3D CNN by replacing 2D projections

with a 3D volumetric representation (projective D-TSDF

volumes [40]). In the HIM2017 challenge [58], they ap-

ply a 3D deep learning method [11], where the inputs are

3D points and surface normals. Moon et al. [24] propose

a 3D CNN to estimate per-voxel likelihoods for each hand

joint. NAIST RV [56] proposes a 3D CNN with a hierarchical

branch structure, where the input is a 50³-voxel grid.
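
The two input representations discussed above can be illustrated with a minimal NumPy sketch. It is not the pipeline of any evaluated method: the function names, the millimeter units, the reference depth `center_depth`, and the cube half-width `half_extent` are assumptions made for illustration, and the hand crop is assumed to have been cut out already using the bounding box. The first function normalizes a depth crop to [-1, 1] for a 2D CNN; the second builds a binary occupancy grid (50 voxels per side by default) for a 3D CNN.

```python
import numpy as np


def normalize_depth_crop(depth_crop, center_depth, half_extent):
    """Map depth values of a hand crop to [-1, 1] around center_depth.

    Values outside [center_depth - half_extent, center_depth + half_extent]
    (for example background pixels) are clipped to the boundary.
    All parameter names and units are illustrative assumptions.
    """
    scaled = (depth_crop.astype(np.float32) - center_depth) / half_extent
    return np.clip(scaled, -1.0, 1.0)


def voxelize_points(points, center, half_extent, grid_size=50):
    """Binary occupancy grid of shape (grid_size,) * 3 from N x 3 points."""
    # Map the cube [center - half_extent, center + half_extent] to [0, grid_size).
    scaled = (points - center + half_extent) / (2.0 * half_extent) * grid_size
    idx = np.floor(scaled).astype(np.int64)
    # Keep only the points that fall inside the grid.
    inside = np.all((idx >= 0) & (idx < grid_size), axis=1)
    grid = np.zeros((grid_size,) * 3, dtype=np.float32)
    grid[tuple(idx[inside].T)] = 1.0
    return grid


# Toy inputs: a 2 x 2 depth crop and three 3D points, one far outside the cube.
crop = np.array([[400.0, 450.0], [500.0, 2000.0]])
normalized = normalize_depth_crop(crop, center_depth=450.0, half_extent=100.0)

points = np.array([[0.0, 0.0, 0.0], [99.9, 0.0, 0.0], [1000.0, 0.0, 0.0]])
grid = voxelize_points(points, center=np.zeros(3), half_extent=100.0)
```

Clipping keeps distant background pixels from dominating the input range, and points outside the cube are simply dropped from the voxel grid; real systems differ in how they choose the crop center and extent.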

Detection-based vs. Regression-based. Detection-

based methods [23, 24] produce a probability density map

for each joint. The method of RCN-3D [23] is an RCN+ net-

work [17], based on Recombinator Networks (RCN) [18]

with 17 layers and 64 output feature maps for all layers ex-

cept the last one, which outputs a probability density map

for each of the 21 joints. V2V-PoseNet [24] uses a 3D

CNN to estimate per-voxel likelihood of each joint, and a

CNN to estimate the center of mass from the cropped depth

map. For training, 3D likelihood volumes are generated by

placing normal distributions at the locations of hand joints.
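
As a rough illustration of the target generation just described, the sketch below places an unnormalized Gaussian at each joint location in a voxel grid and recovers the joint by taking the per-volume maximum. It is a simplified sketch rather than the V2V-PoseNet implementation: the grid size, the value of `sigma`, the (z, y, x) axis order, and all function names are assumptions chosen for illustration.

```python
import numpy as np


def gaussian_likelihood_volume(joint_voxel, grid_size=32, sigma=1.5):
    """Volume with an unnormalized Gaussian centered at one joint (voxel coordinates)."""
    coords = np.arange(grid_size, dtype=np.float32)
    z, y, x = np.meshgrid(coords, coords, coords, indexing="ij")
    jz, jy, jx = joint_voxel
    sq_dist = (z - jz) ** 2 + (y - jy) ** 2 + (x - jx) ** 2
    return np.exp(-sq_dist / (2.0 * sigma ** 2))


def build_targets(joints_voxel, grid_size=32, sigma=1.5):
    """Stack one likelihood volume per joint: (num_joints, D, D, D)."""
    return np.stack(
        [gaussian_likelihood_volume(j, grid_size, sigma) for j in joints_voxel]
    )


def decode_joints(volumes):
    """Voxel index of the maximum of each volume: (num_joints, 3)."""
    flat_argmax = volumes.reshape(volumes.shape[0], -1).argmax(axis=1)
    return np.stack(np.unravel_index(flat_argmax, volumes.shape[1:]), axis=1)


# Two hypothetical joints given in voxel coordinates (z, y, x).
joints = np.array([[3, 4, 5], [10, 20, 30]])
targets = build_targets(joints)
recovered = decode_joints(targets)
```

Decoding by argmax limits precision to the voxel resolution; finer estimates would require some form of sub-voxel refinement, which is outside the scope of this sketch.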

Regression-based methods [1, 3, 11, 14, 20, 21, 29, 56]
